How do web servers process multiple requests at the same time?

In this article I explore the most common way backend servers handle a large number of client connections at the same time.

But first, a conversation between me and the frontend...

Me : How are things with you these days, Frontend? Heard you were having some trouble with the Backend?

Frontend : Yeah, it's all good now. I was unhappy with Backend for a while. The response times were a bit slow, but things have been better ever since I started using the "keep-alive" header correctly.

Me : Oh nice! What is this "keep-alive" header?

Frontend : It's a header which tells the server that I want to keep the connection to it alive, and for how long. Since I communicate with the server over HTTP, which itself works on top of TCP, I have to set up a connection first, which involves some heavy stuff - a DNS lookup, the TCP three-way handshake and a TLS handshake. Previously I was making these HTTP requests without using the "keep-alive" header and the connection was getting closed after every request, increasing the latency.

A bit of networking...

Basically, closing a TCP connection after every request is like hanging up a call after every sentence and then calling again to say the next thing.
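To make the phone-call analogy concrete, here is a minimal sketch using only Python's standard library. It assumes a throwaway local server, and the names `EchoPortHandler` and `fetch_ports` are made up for this illustration. The server replies with the client's source port, so a repeated port means the same TCP connection was reused.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoPortHandler(BaseHTTPRequestHandler):
    # With HTTP/1.1 the stdlib server keeps connections open by default.
    protocol_version = "HTTP/1.1"

    def do_GET(self):
        # Reply with the client's source port so connections can be told apart.
        body = str(self.client_address[1]).encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def fetch_ports(reuse_connection, requests=3):
    """Return the client port the server saw for each request."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoPortHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address

    ports = []
    conn = http.client.HTTPConnection(host, port)
    for _ in range(requests):
        conn.request("GET", "/")
        ports.append(int(conn.getresponse().read()))
        if not reuse_connection:
            # Hang up after every request; http.client opens a fresh
            # TCP connection automatically on the next request().
            conn.close()
    conn.close()

    server.shutdown()
    server.server_close()
    return ports
```

Calling `fetch_ports(True)` should report the same port for every request, while `fetch_ports(False)` pays for a new TCP handshake each time and will usually show a different port per request.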

Let's dive a bit deeper, but first let's go through some networking concepts.

Sockets are basically the endpoints of a network connection. You can think of a socket as the combination of an IP address and a port number. So establishing a TCP connection between the client and the server can be visualised as connecting a port on the client device to port 80 or 443 on the backend server.

A TCP connection can be seen as a stream, something like an I/O stream in Java. Suppose our backend server is running on port 8080. This means it is listening for TCP connections on this port. After accepting a connection, what the server gets from the OS is a file descriptor. We can now visualise a TCP connection as a file that we can read from and write to.
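The "connection as a file" idea can be sketched in a few lines of Python. This is only an illustration: the function name `stream_demo` is made up, and both the client and the server live in the same process so the example runs on its own.

```python
import socket


def stream_demo():
    """Accept one TCP connection and treat it like a file."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    listener.listen()

    client = socket.create_connection(listener.getsockname())
    conn, _addr = listener.accept()

    # The accepted connection is backed by an OS file descriptor (an integer).
    fd = conn.fileno()

    # makefile() wraps the socket in file-like objects for stream-style I/O.
    reader = conn.makefile("rb")
    writer = conn.makefile("wb")

    client.sendall(b"GET / HTTP/1.1\r\n")
    request_line = reader.readline()        # read from the connection like a file
    writer.write(b"HTTP/1.1 200 OK\r\n")    # write to it like a file
    writer.flush()

    client_reader = client.makefile("rb")
    reply = client_reader.readline()

    for closable in (reader, writer, client_reader, conn, client, listener):
        closable.close()
    return fd, request_line, reply
```

The returned `fd` is just a small non-negative integer, the same kind of handle the OS hands out for regular files.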

Listening for connections is a non-blocking system call, whereas accepting a connection is blocking (by default). Basically, once listen() has been called, all incoming connections get queued by the OS while waiting for accept() to be called on each of them one by one. This is very similar to push() and poll() on a queue data structure.
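The queueing behaviour can be observed with a small Python sketch. The helper name `accept_in_order` and the backlog size of 8 are arbitrary choices for this illustration. All clients connect before accept() is ever called, and the OS holds the completed connections until the server takes them off the queue.

```python
import socket


def accept_in_order(n_clients=3):
    """Connect several clients first, then accept them one by one."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(8)            # returns immediately; 8 is the backlog hint
    addr = listener.getsockname()

    # These connect() calls succeed even though accept() has not run yet:
    # the kernel completes the handshakes and queues the connections.
    clients = [socket.create_connection(addr) for _ in range(n_clients)]
    client_ports = [c.getsockname()[1] for c in clients]

    accepted_ports = []
    for _ in range(n_clients):
        conn, peer = listener.accept()   # takes the next queued connection
        accepted_ports.append(peer[1])
        conn.close()

    for c in clients:
        c.close()
    listener.close()
    return client_ports, accepted_ports
```

Every client port shows up in `accepted_ports`, typically in the same order in which the clients connected.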

And now the backend enters ...

Me : Looks like you are getting really popular these days! So how are you handling that many devices sending you all those requests at the same time !?

Backend : Well, my servers have got a herd of helpers with them. They call it the thread pool. Each one of the threads in that pool can process a request at the same time. That's how I manage all that load coming in!

Me : But don't you have a limit on the number of threads that can run at the same time? And won't that be too many threads if each connection gets assigned its own thread?

Backend : Haha! It's not possible to serve that many clients if I have to assign a thread to each of those connections. By being event-driven I don't have to dedicate a thread to a connection, and I only need to respond when there is some kind of activity on one ; )

The Problem

As we saw earlier, accept() is a blocking system call, which means that for accept() to be called again, the previous call should have returned.

Suppose the backend is getting multiple GET calls from different clients. Surely you wouldn't want to handle all these GET calls one by one. The backend also needs to keep each connection open if there is a "keep-alive" header in its request.

This problem can be easily solved by assigning a thread to each incoming connection. These threads work in parallel, freeing up the server to accept more connections while the requests are being processed.

We keep the thread running for as long as the connection is alive, listening on the connection socket for any further requests.
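A thread-per-connection server along these lines might look like the following Python sketch. It is a toy line-echo protocol rather than real HTTP, and `handle_connection`, `serve` and `thread_per_connection_demo` are hypothetical names for this illustration.

```python
import socket
import threading


def handle_connection(conn):
    """Serve one client for as long as its connection stays open."""
    with conn, conn.makefile("rb") as requests:
        for line in requests:                 # blocks until the next request
            conn.sendall(b"echo: " + line)
    # The loop ends when the client closes the connection.


def serve(listener, n_connections):
    """Accept loop: hand every new connection to its own thread."""
    for _ in range(n_connections):
        conn, _addr = listener.accept()       # blocks until a client connects
        threading.Thread(target=handle_connection, args=(conn,),
                         daemon=True).start()


def thread_per_connection_demo(n_clients=2, rounds=2):
    listener = socket.create_server(("127.0.0.1", 0))
    acceptor = threading.Thread(target=serve, args=(listener, n_clients))
    acceptor.start()

    addr = listener.getsockname()
    clients = [socket.create_connection(addr) for _ in range(n_clients)]
    readers = [c.makefile("rb") for c in clients]

    replies = []
    for r in range(rounds):                   # several requests per connection
        for i, c in enumerate(clients):
            c.sendall(f"request {r} from client {i}\n".encode())
        for reader in readers:
            replies.append(reader.readline().decode().strip())

    for reader, c in zip(readers, clients):
        reader.close()
        c.close()
    acceptor.join()
    listener.close()
    return replies
```

Note that each handler thread spends almost all of its life blocked, waiting for the next request on its own connection. That idle waiting is exactly what becomes expensive once there are thousands of kept-alive connections.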

But now, even if the server doesn't have to handle 10k requests at the same time, keep-alive means it has to maintain a lot more open connections. And the server just can't have that many threads running: every thread costs memory for its stack, only as many threads as there are CPU cores can actually run at once, and the rest add context-switching overhead.

The Solution - Event Driven Architecture (Nginx Internals)

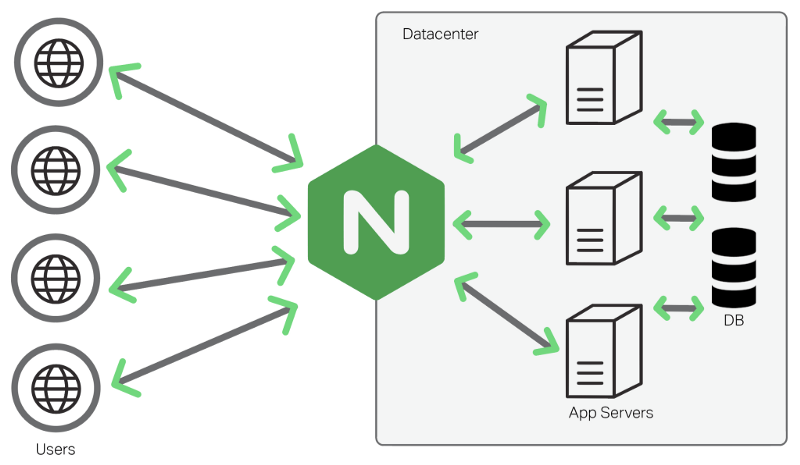

Nginx can work as a load balancer and as a reverse proxy for the backend servers, routing incoming requests to the upstream app servers. It also solves the problem described above.

Nginx got popular because of how efficiently it was able to solve the C10k problem. The C10k problem is the problem of handling 10k TCP connections at the same time. Let's dig a bit deeper to see how Nginx handles that many connections.

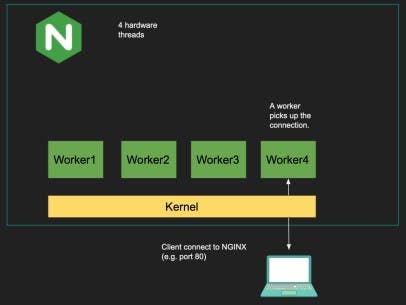

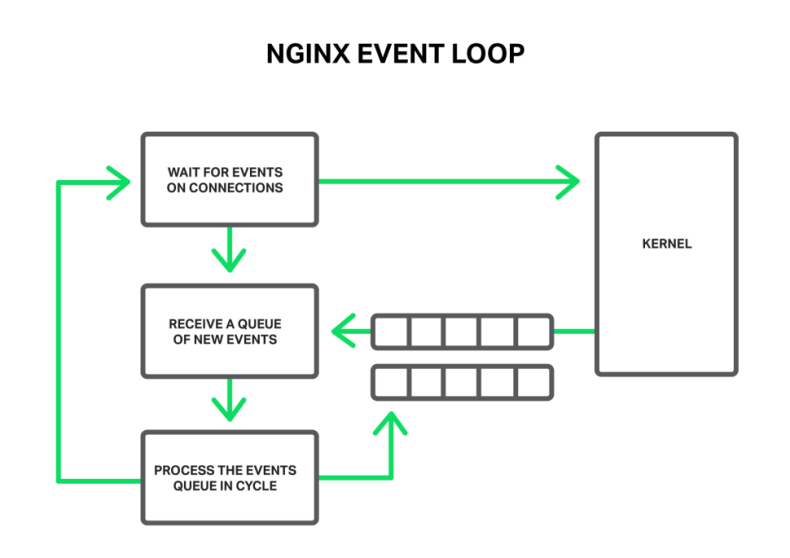

When the Nginx application starts, it creates a bunch of processes - a master process, cache helper processes and worker processes. The worker processes handle all the incoming connections. And instead of creating a thread per connection, each worker process listens for events on these connections.

The number of worker processes is usually equal to the number of CPU cores, and the worker processes compete for the incoming connections.

Each worker process has its own list of open connections. It watches all the open connections assigned to it for anything new available on the stream. Rather than blocking on each connection in turn, it does this in a non-blocking way with the help of mechanisms like epoll and kqueue, where the OS reports only the connections that have activity.

If it finds anything new, an event is triggered and the request is routed to the appropriate app server. Once the app server responds, another event gets triggered to write to the connection with the client.
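The event loop idea can be sketched with Python's `selectors` module, which uses epoll on Linux and kqueue on BSD and macOS where available. This is a simplified illustration and not how Nginx itself is implemented: it echoes data back instead of proxying to an app server, and `run_event_loop` and `event_loop_demo` are made-up names.

```python
import selectors
import socket


def run_event_loop(listener, expected_messages):
    """One thread serving many connections by reacting to readiness events."""
    sel = selectors.DefaultSelector()
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data="accept")

    handled = []
    while len(handled) < expected_messages:
        events = sel.select(timeout=5)   # sleep until some socket is ready
        if not events:
            break                        # nothing happened; stop the demo
        for key, _mask in events:
            if key.data == "accept":
                conn, _addr = listener.accept()   # ready, so it won't block
                conn.setblocking(False)
                sel.register(conn, selectors.EVENT_READ, data="client")
            else:
                conn = key.fileobj
                data = conn.recv(1024)
                if data:
                    # A real server would buffer the reply and wait for
                    # EVENT_WRITE; a direct send is fine for tiny payloads.
                    conn.sendall(b"echo: " + data)
                    handled.append(data)
                else:                    # client closed the connection
                    sel.unregister(conn)
                    conn.close()

    for key in list(sel.get_map().values()):
        if key.data == "client":
            key.fileobj.close()
    sel.close()
    return handled


def event_loop_demo(n_clients=3):
    listener = socket.create_server(("127.0.0.1", 0))
    addr = listener.getsockname()
    clients = [socket.create_connection(addr) for _ in range(n_clients)]
    for i, c in enumerate(clients):
        c.sendall(f"hello from {i}".encode())

    run_event_loop(listener, expected_messages=n_clients)

    replies = [c.recv(1024) for c in clients]
    for c in clients:
        c.close()
    listener.close()
    return replies
```

Everything here runs on a single thread: the loop never waits on any one connection, it only wakes up when the OS reports that some socket has something to read.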

In this way the worker process is busy only when there is some request to be processed. This is also faster, since with roughly one worker per CPU core (workers can even be pinned to cores) there is far less context switching.

Now our resource-limited, multi-threaded app servers only need to have TCP connections with the Nginx instance proxying them. Nginx deals with the problem of handling many client connections, freeing up our app servers to process the requests without worrying about too many connections.

Conclusion

We saw how, by means of Event-Driven Architecture, multi-threaded servers can maintain a large number of connections. The above way of handling connections has now been integrated into traditional web servers, for example Tomcat NIO.